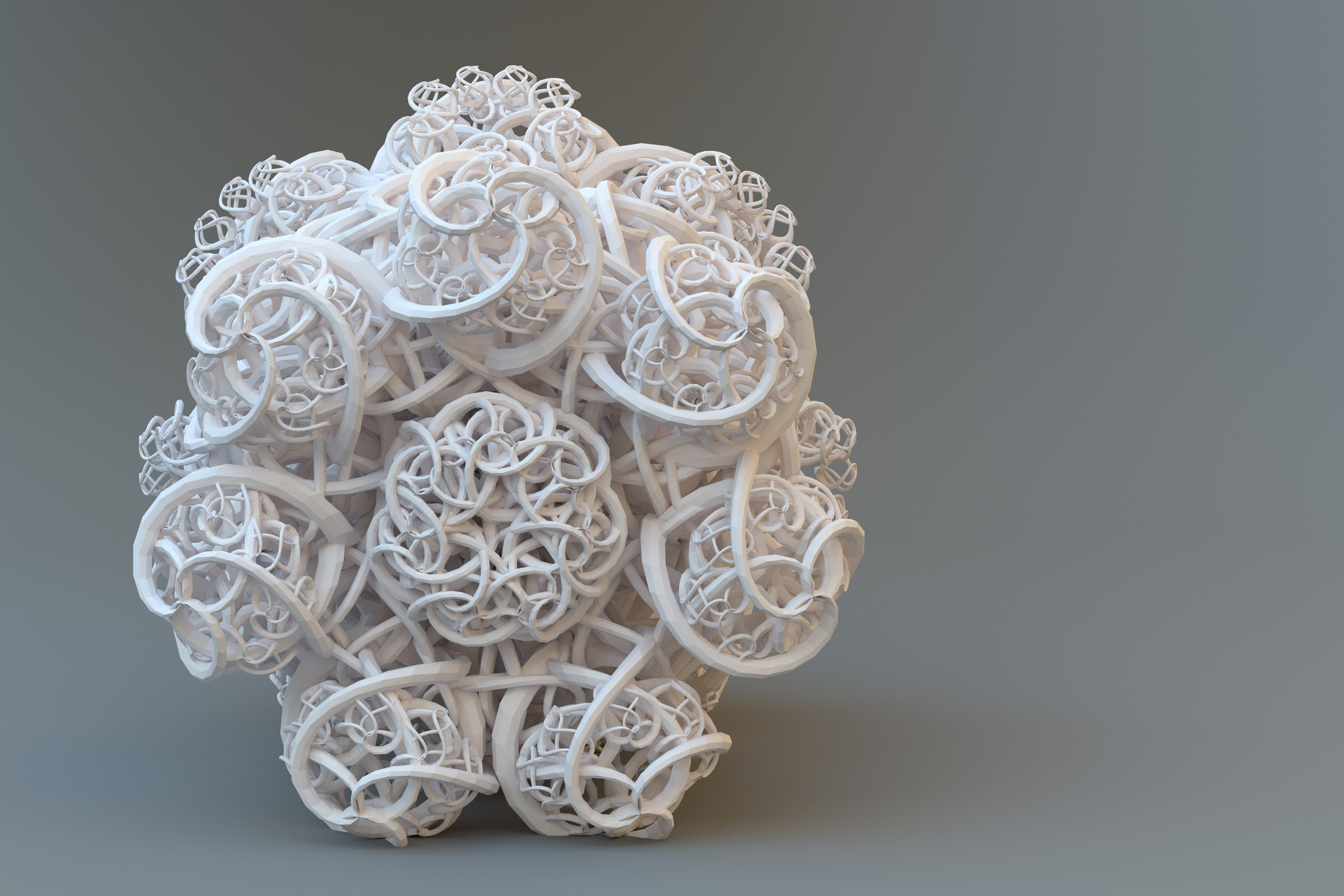

Animation by kastanka for iStock

Machine learning will extract engineering principles from biological complexity and generate new molecules, gene and cell therapies, and medicines we have yet to imagine.

Important scientific advances mostly occur when a discipline graduates from expensive guesswork to systematic engineering based on a set of well-understood principles. In physics, for example, scientists first explored and uncovered the characteristics of electron mobility, including features like the dependence of mobility on an electric field and the inherent conductivity of metals. Soon after, we formulated the equations that accurately predict such phenomena, which in turn allowed us to control electrons and build complex systems, such as transistors. This precise control of electron mobility was a key advance that marked the beginning of the digital age.

Almost every scientific and industrial revolution can be traced to a similar transition. The pattern goes back at least to the Carnot cycle and the steam engines that fueled the industrial revolution: the moment a set of principles can be applied predictably and reproducibly to engineer a useful system, entirely new fields are born.

But the intentional and controllable engineering of biological systems has largely eluded us. Without a set of well-understood principles upon which to create novel systems, we are left with discovery. Almost all modern medicines have been discovered or systematically identified from an existing set of biological solutions, rather than intentionally generated for a given purpose. This is because biology, in contrast to physics, is governed by highly complex and often stochastic rules that do not easily yield a parsimonious set of principles that are readily understood. Maybe evolution has not equipped our brains to understand how millions of minute statistical patterns lead to the complex behavior of the cell. But a new kind of intelligence is emerging that excels at this kind of high-dimensional probabilistic reasoning: machine learning. It seems that machine learning can reveal the engineering principles of biology that have hitherto been hidden. These hidden principles, once uncovered, can be used directly for machine generation of novel and potent biological molecules.

What if machine learning can generate novel biology?

Historically, much of our biological knowledge was discovered through experiments designed to understand how perturbing a system affects its properties. With each new observation, we updated our world view, formulated new hypotheses, and tested our predictions. This empirical process of discovery underlies the scientific method, which hasn't evolved much since Francis Bacon articulated it in the 1600s.

In biology, this process results in a systematic cataloging of evolutionary history and the solutions it has produced. In order to create novel biology, inaccessible to evolutionary processes, we need to know the generalizable principles by which biological systems function. However, we often cannot write down such equations or principles, and we even struggle to identify the basic unit of biology on which to operate. Since the first proposal for standard biological parts, we have attempted to define the elementary building blocks of biology that can be predictably combined to produce complex biological systems, analogous to the electron and semiconductor for a transistor. This extensive reductionist effort has revealed a breathtaking amount of complexity and has ultimately stymied our ability to find generalizable principles and engineer the medicines of the future at scale.

If the generalizable principles required for engineering do exist, yet remain elusive due to the immense and stochastic complexity, machines are perfectly poised to master this challenge.

Machine learning can extract the hidden principles of biology

Machine learning has revolutionized fields including computer vision, natural language processing, speech recognition, medical imaging, and computational biology over the past decade. The advent of large data sets coupled with advances in processing power has made it practical to train enormous neural networks. These neural networks, which are said to employ deep learning because they stack many layers of nodes, are accomplishing tasks that until recently were thought too difficult for computers to tackle, such as winning the ancient Chinese game of Go. In a pivotal development in 2016, AlphaGo, a deep learning system created by Google DeepMind, defeated Go champion Lee Sedol four games to one in a five-game match in Seoul. Some of the system's decisive moves remain difficult for Go masters to understand, even with the benefit of hindsight. The following year, DeepMind developed AlphaGo Zero, a deep learning system that started out knowing nothing more than the rules of the game and was otherwise completely self-taught. The new system quickly learned, and then discarded, strategies that humans had discovered and honed over millennia, and within 36 hours its abilities surpassed those of the system that had beaten Lee just a year earlier.

These advances are beginning to change the future of biology. For example, DeepMind's AlphaFold won the most recent biennial CASP protein-folding contest, and it did so by an unprecedented margin. This in many ways parallels the AlphaGo story: When a problem is correctly framed for machine learning and the right mix of data and computing power is available, a field's longstanding and deeply held beliefs can be quickly overturned. Although AlphaFold today relies on critical pieces of hard-earned human knowledge, it's easy to imagine that as data increase and algorithms advance, biology will follow a progression similar to the one from AlphaGo to AlphaGo Zero. Artificial intelligence pioneer Richard Sutton calls this “The Bitter Lesson”: in the long run, general methods that scale with computation outperform approaches built on human knowledge. We will likely continue to learn this lesson in field after field of science.

Besides structure prediction, machine learning is addressing other fundamental questions about proteins. Although proteins serve remarkably diverse functions across the tree of life, generalizable features can be extracted not only to predict structure but also to generate specific desired structures and functions directly from sequence. These capabilities stem from the explosive growth over the last decade in the number of available protein sequences from which to extract the required hidden principles. After these principles are discovered throughout the protein universe, they can become the foundation for generating specific desired protein functions.

Once machine learning cracks the code that links a protein’s sequence of amino acids to its structure and function, applying models to directly generate proteins never before seen in nature is inevitable. It will be a transformative moment in the history of biology and medicine, and it will unlock therapies previously only imagined in the realm of science fiction. Like Go and protein structure prediction, machine-generated therapies will likely have counterintuitive and difficult-to-understand properties. Just as machine learning has forever changed the understanding of how Go is played, our forthcoming ability to generate endless therapies across the spectrum of human disease will revolutionize the field of medicine.

Generative biology will move medicine past the previous era of discovery

Today, drug discovery is an expensive process of trial and error that is only getting more costly and inefficient over time. Over the last 60 years, the number of new drugs approved per billion dollars of research and development spending has steadily declined. This is the inverse of what Moore's law has done for computing, and the trend has been aptly termed Eroom's law (“Moore” spelled backward). The exponential rise in the inefficiency of drug discovery has contributed to an explosion in the cost of developing drugs: the cost of commercializing a genuinely new drug has swelled from approximately $800 million in 2001 to more than $2.5 billion today. These mind-boggling numbers highlight the unpredictable nature of drug discovery and development. Years of experience and insight have not allowed us to predict which molecules will serve which purposes. Further, although the rise of high-throughput technologies suggested that a brute-force approach of randomly creating and screening more molecules might solve the fundamental problems of a discovery paradigm, in fact this mindset has led to more costly and ultimately less productive discovery efforts.

Fundamentally, contemporary drug discovery is finding molecules that already exist or that nature has already evolved, a solution space that is severely limited and not fit for purpose. Proteins are an example: For any given amino acid sequence, the probability that nature has sampled that specific molecule at any point in the history of life is approximately 10⁻¹⁰⁰, equivalent to less than a drop of water in all of Earth's oceans. Beyond the incomprehensible search space, the goals of modern medicine and evolution only rarely align.
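To give a sense of the scale involved, here is a minimal Python sketch that counts possible protein sequences. It assumes a protein of length L built from the 20 standard amino acids, so there are 20^L distinct sequences. The chosen lengths are illustrative only, and the sketch does not reproduce the article's sampling-probability estimate, which also depends on how many sequences evolution has actually explored.

```python
import math

# Assumption: sequences are built from the 20 standard amino acids.
NUM_AMINO_ACIDS = 20


def log10_sequence_space(length: int) -> float:
    """Return log10 of the number of distinct sequences of this length (20**length)."""
    return length * math.log10(NUM_AMINO_ACIDS)


if __name__ == "__main__":
    # Illustrative lengths; real proteins range from a few dozen to thousands of residues.
    for length in (50, 100, 300):
        exponent = log10_sequence_space(length)
        print(f"length {length}: about 10^{exponent:.0f} possible sequences")
```

Even a modest 100-residue protein has roughly 10^130 possible sequences. This is why sampling, whether by evolution or by screening, can only ever touch a vanishing fraction of the space.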

Despite all the challenges of the discovery paradigm for medicines, it has scarcely changed in a hundred years, even as the targets for therapy have changed and evolved. For example, gene therapy, which treats genetic disorders by directly inserting a healthy gene into a patient's cells, once seemed as if it would be a straightforward application of existing genetic understanding. But it has proved exceptionally difficult in practice. The reason is simple: Even when we clearly understood how to cure a disease, we lacked the tools to deliver nucleic acid cargo into the cell. Only when we discovered natural machinery that had evolved to accomplish this goal was the promise of gene therapy realized. Today, many approved gene therapies rely on the natural biological machinery of adeno-associated virus (AAV), which is only slightly altered from its natural form and not at all tailored to its new therapeutic function. By extracting the hidden principles by which this highly complex biological machinery functions, we could move past these inherent challenges and directly generate gene therapies that are both safer and able to reach more diseases and cell types.

Many of the most powerful medicines of the first half of the twentieth century, such as insulin, are direct copies of what nature created. More recent medicines have tended to involve relatively minor changes to naturally derived molecules and have been minimally tailored for therapeutic use. A widespread example is monoclonal antibody drugs, which have made up more than half of all drug approvals since 2015. The immune system naturally produces antibodies to recognize, bind, and neutralize specific biological targets. To be potent enough to serve as a therapeutic, however, an antibody must bind its target much more strongly than the immune system requires. To overcome this challenge, scientists start with a natural antibody produced by the immune system and use directed evolution to increase its affinity: they mutate the existing antibody, select the variants with the highest function, and repeat. Medicines developed through this hijacked version of evolution remain few, and the technique expands the search space only minutely compared with the ocean of possibilities.
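The directed-evolution cycle described above is an iterative mutate, select, and repeat loop. The toy Python sketch below illustrates only that loop structure, under loud assumptions. Sequences are strings of one-letter amino acid codes, and the hypothetical `toy_fitness` function, which counts positions matching a made-up target sequence, stands in for a laboratory binding assay. Real campaigns screen physical libraries, and nothing here models antibody biophysics.

```python
import random

# The 20 standard amino acids, one-letter codes.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def toy_fitness(seq: str, target: str) -> int:
    """Hypothetical stand-in for binding affinity: number of positions matching a target."""
    return sum(a == b for a, b in zip(seq, target))


def mutate(seq: str, rng: random.Random, rate: float = 0.05) -> str:
    """Apply random point mutations; each position is redrawn with probability `rate`."""
    return "".join(
        rng.choice(AMINO_ACIDS) if rng.random() < rate else aa for aa in seq
    )


def directed_evolution(start: str, target: str, rounds: int = 50,
                       library_size: int = 200, keep: int = 10,
                       seed: int = 0) -> str:
    """Run a toy mutate -> select -> repeat loop starting from a single parent."""
    rng = random.Random(seed)
    parents = [start]
    for _ in range(rounds):
        # Mutate: build a variant library from the current parents (parents are retained).
        library = parents + [mutate(rng.choice(parents), rng)
                             for _ in range(library_size)]
        # Select: keep only the highest-scoring variants as the next round's parents.
        library.sort(key=lambda s: toy_fitness(s, target), reverse=True)
        parents = library[:keep]
    return parents[0]


if __name__ == "__main__":
    rng = random.Random(1)
    start = "".join(rng.choice(AMINO_ACIDS) for _ in range(30))
    target = "".join(rng.choice(AMINO_ACIDS) for _ in range(30))
    best = directed_evolution(start, target)
    print("fitness:", toy_fitness(start, target), "->", toy_fitness(best, target))
```

Each round changes only a few positions in sequences that all descend from the starting parent, so the search stays in a small neighborhood of the original molecule. That is the limitation the paragraph points to.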

It is unrealistic to believe we can rely on nature’s medicine cabinet forever to serve humanity’s growing and diverse medical challenges. We must develop the capabilities we will need to generate never-before-seen therapies that are tailored to human needs.

Generative biology is poised to transform medicine

At Flagship Pioneering we believe that the transition from drug discovery to what we call generative biology will constitute a sea change in life sciences. No longer will we be discovering suboptimal medicines that nature evolved for its own purposes; instead, we will be generating purpose-built medicines. A generative approach also promises to parallelize drug discovery in unforeseen ways.

Generative biology is now a realistic goal. The decrease in cost of both high-throughput DNA sequencing and DNA synthesis, the exponential increase in computing power, and the rapid advancement of machine learning algorithms will together transform medicine. Therapeutics will no longer be limited to single molecules but will include more complex systems, such as viruses and entire cells. Machine learning is poised to extract the necessary engineering principles at each layer of biological complexity and ultimately generate not only novel molecules but also next-generation gene and cell therapies, and medicines we have yet to imagine.

Bringing the vision of generative biology to life will by definition require the categorical merger of scientific and technological expertise. Creating Flagship's generative biology companies will require innovations in machine learning to move beyond the prediction of existing molecules to the generation of novel molecules. It will also require new perspectives on biological data that maximize the ability of a machine to extract the underlying hidden principles. Working at the intersection of biology, machine learning, and engineering, scientists from diverse viewpoints will need to collaborate in new ways, inventing and innovating together. At Flagship, we are building cross-disciplinary teams of experts to take on this mission. We aspire to a future in which chance and unpredictability in drug discovery give way to intentionally and controllably generated biology and medicine.