genetic chemistry: the use of genetic code to discover the foundations of small molecule medicines

Polyintelligence

by Noubar Afeyan

“Nature is the source of all true knowledge. She has her own logic, her own laws, she has no effect without cause nor invention without necessity.”

These observations from Leonardo da Vinci are powerfully reinforced in Ken Burns’ Leonardo da Vinci, a documentary on PBS that captured my attention and led me to ponder the notions of knowledge and intelligence. This deep dive into the life and history of the quintessential “Renaissance man” showcases not only his genius but also his obsession with nature. Though he lacked formal education, his innate curiosity drove him toward a constant, intensive observation of the world around him. Nothing escaped his interest: water, soil, birds, human social behavior. Nature was his teacher, guide, and inspiration.

Leonardo’s appetite for learning was wide-ranging but not scattered. What seemed to fascinate him most was not simply mastery of discrete domains, but the interconnection among disciplines. His holistic, integrated approach to analyzing the human body, designing machines, observing water, and painting enabled him to produce work that was greater than the sum of its parts — work that inspires awe even centuries later.

The term polymath is fitting for his unique intellect: someone with insatiable curiosity, a capacity to synthesize information from diverse areas, and a knack for connecting seemingly unrelated concepts to create groundbreaking ideas. The word seems suited only to human intelligence, but if Leonardo teaches anything, it is that nature is intelligent, too, providing a wellspring of innovation and wisdom.

With the rise of artificial (machine) intelligence, we are entering a new chapter — a synthesis of human, machine, and nature’s intelligence. This synthesis, which I term polyintelligence, represents the emergence of a modern-day renaissance: one in which human intelligence and nature’s intelligence will adapt to integrate and co-exist with artificial intelligence, human intelligence and artificial intelligence will inform and be informed by nature’s capacity for problem solving, and machine intelligence will decode nature to serve up solutions to allow humans to thrive. Polyintelligence powerfully combines human creativity and imagination, nature’s adaptive genius, and the reach, scale, and speed of machine intelligence.

Watch and listen as Flagship General Partner Avak Kahvejian describes how the field of biology is transforming from a science of observation to a deterministic and programmable endeavor.

Viruses evolve at a rapid pace within the human body to evade immune defenses, refine their interactions with cells, and adapt to shifting conditions, generating a diverse array of proteins that effectively manipulate our biology. What if we could repurpose these molecular tools designed to perturb biology to expand our repertoire of therapeutics?

“Going viral” has become a familiar phrase, describing how ideas and memes spread at lightning speed. But long before social media co-opted this concept, actual viruses were perfecting the art of rapid dissemination.

Viruses have a simple job: survive and replicate. But they can’t do that job on their own, forcing them to seek a host and enmesh themselves into the intricate machinery of that host’s body. In pursuing their goals, they evolve at a staggering pace, generating a diverse array of molecular tools that manipulate human biology — proteins that enable them to evade immune responses, reprogram cells, and fine-tune complex systems to ensure their spread. Over billions of years, this relentless evolutionary pressure has forged a resource of immense value: a comprehensive viral proteome containing millions of proteins uniquely suited to modulate our biological processes.

From a therapeutic perspective, viral proteins represent a pre-optimized blueprint for developing new medicines. Because viruses have explored numerous strategies for modulating human biology, they have developed unique insights humans have yet to discover or leverage. Over billions of years, they developed proteins with extraordinary potency, specificity, selectivity, and stability, often achieving highly miniaturized forms that fly under the radar of the host’s immune system. Beyond just evading immune surveillance, viral proteins have also acquired entirely novel functions that intricately modulate human biology. These proteins don’t just target one isolated mechanism in the cell; instead, they coordinate or modulate several complementary pathways that reinforce one another’s effects. This allows for a more integrated and potent influence on the host’s biological systems, which could be leveraged to inform the design of more effective means to drive homeostasis — one of the most challenging hallmarks of biology to replicate. By tapping into the evolutionary strategies that produce these remarkable attributes, we can repurpose proteins designed to perturb biology into instruments to heal dysregulated biology.

At Prologue Medicines, we are applying AI to systematically reveal the brilliance of the viral proteome and develop therapies to tackle disease in ways that were previously unimaginable. We are transforming viral proteins from adversaries into collaborators to expand our therapeutic toolbox. Prologue exemplifies the new era of polyintelligence where the boundaries between nature, human insight, and machine intelligence blur, ushering in a future where we co-create solutions to the world’s most pressing health challenges.

Humans have long harnessed imagination to expand the horizon of possibilities. Now, artificial intelligence could augment this capacity, opening a new frontier of innovation and discovery.

Humans have evolved the capability to transcend the world our senses perceive and conjure alternate realities seemingly out of thin air. We call this capability imagination.

Like everything that makes us human, imagination is the result of evolution, a process geared toward advancements that favor survival, so it stands to reason that imagination is essential. By enabling us to envision potential outcomes, anticipate uncertainties, and adapt our strategies, imagination enhances our ability to navigate complex environments and challenges.

In our work at Flagship, it is imagination’s ability to birth transformative ideas that we most prize, liberating us from the constraints of reason to reconsider tomorrow’s possibilities. We call our approach emergent discovery, a stage-gated and systematic process akin to Darwinian evolution. Through a structured process of intellectual leaps, iterative search and experimentation, and selection, we pressure test ideas until they cannot be disproved. The seeds of each innovation, from microbiota-based therapeutics, mRNA vaccines, and redosable genetic medicines to more-sustainable farming practices, are planted when we untether our thinking from dogma and “reasonableness” to imagine alternative futures, often posed as far-flung what-if questions.

Our ability to systematize breakthroughs suggests genius is not necessarily a requirement, and that the imagination from which these ideas are born is perhaps not as enigmatic as we have come to believe. Through artificial intelligence (AI) we have begun to augment tasks that require human logic, reasoning, and precision — from summarizing meeting notes to twisting strings of amino acids into protein structures. AI is adept at simulating vast scenarios and making complex connections between concepts — capabilities central to human imagination. Perhaps imagination, this mysterious product of the human mind, is not so elusive or even exclusive to our minds. What if we could engineer a creativity copilot to augment our imagination and creativity?

At what point does imagination emerge? Computer scientist and philosopher Judea Pearl’s Ladder of Causation offers a compelling framework to explore this emergence by providing structure to human and machine reasoning. His three-level hierarchy — association, intervention, and counterfactuals — illustrates how humans move from basic observation to the sophisticated counterfactual reasoning that defines imagination. The first level, association, involves recognizing patterns and correlations — a domain where AI excels through its ability to process vast amounts of data. The second level, intervention, is where imagination begins to emerge as we move beyond passive observation to relax prior beliefs and actively test scenarios, akin to the way we explore what-if questions at Flagship. Finally, the top rung, counterfactuals, is where imagination thrives, enabling us to envision alternate realities and outcomes that diverge from what has actually occurred. The challenge, then, is to recapitulate these features computationally.
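The three rungs can be made concrete with a toy structural causal model. The sketch below is purely illustrative: the variables `z`, `x`, `y`, the noise probabilities, and the helper names are all hypothetical and are not drawn from Pearl's work or from Flagship's methods. It enumerates every combination of background noise so that each rung can be computed exactly: conditioning on an observation (association), forcing a value (intervention), and replaying one specific case under a different choice (counterfactual).

```python
from itertools import product

# Hypothetical probabilities for three independent background (noise) variables.
P_UZ = {0: 0.5, 1: 0.5}   # decides the hidden common cause z
P_UX = {0: 0.9, 1: 0.1}   # small chance that x deviates from z
P_UY = {0: 0.5, 1: 0.5}   # extra push toward the outcome y


def model(uz, ux, uy, do_x=None):
    """Toy causal model: z influences both x and y; x also influences y."""
    z = uz
    x = (z ^ ux) if do_x is None else do_x   # do_x overrides the natural cause of x
    y = 1 if x + z + uy >= 2 else 0
    return z, x, y


def worlds():
    """Yield every noise setting together with its probability."""
    for uz, ux, uy in product((0, 1), repeat=3):
        yield (uz, ux, uy), P_UZ[uz] * P_UX[ux] * P_UY[uy]


def p_y_given_x(x_value):
    """Rung 1, association: P(y=1 | we observe x=x_value)."""
    num = den = 0.0
    for u, w in worlds():
        _, x, y = model(*u)
        if x == x_value:
            den += w
            num += w * y
    return num / den


def p_y_do_x(x_value):
    """Rung 2, intervention: P(y=1 | we force x=x_value)."""
    return sum(w * model(*u, do_x=x_value)[2] for u, w in worlds())


def counterfactual_y(u, x_value):
    """Rung 3, counterfactual: same background noise u, but x set differently."""
    return model(*u, do_x=x_value)[2]


if __name__ == "__main__":
    print("association  P(y=1 | x=1)     =", round(p_y_given_x(1), 3))
    print("intervention P(y=1 | do(x=1)) =", round(p_y_do_x(1), 3))
    unit = (1, 0, 0)  # one specific case: z=1, x followed z, no extra push
    print("observed (z, x, y) =", model(*unit))
    print("y had x been 0     =", counterfactual_y(unit, 0))
```

In this toy model the observed correlation overstates the effect of forcing `x`, because the hidden cause `z` drives both variables. The counterfactual query goes one step further and asks what would have happened in one particular case, which is the kind of "what if the world were different" question the top rung describes.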

How could we steer AI from the first tier (where it already excels) toward tiers two and three? At a high level, we believe we can let AI explore more states during a search by expanding the range of outcomes it considers, so that it surfaces patterns and potential correlations it would otherwise miss. For instance, when AI systems like generative models are allowed to explore a broader sampling distribution, they can generate more diverse solutions, similar to how the human brain imagines various potential outcomes by considering different scenarios and timeframes.

There are several ways in which this could be done. One common method is to raise the "temperature" parameter that controls how a model samples its outputs, but we and others have been exploring interesting alternatives as well. Initially, such “relaxation” of prior beliefs should allow the AI to better simulate hypothetical scenarios to explore what-if questions, akin to experimenting with actions to predict their outcomes. As the sampling distribution widens, we expect that AI will increasingly emulate the human imagination process, allowing for diverse solutions that reflect human-like brainstorming.

We can push this idea even further to allow the model to distribute probabilities more evenly among possible outcomes. This enables less likely options to be considered more, thus enhancing the novelty of outputs beyond conventions. For example, when prompted with "The pizza was ... ," the model might now output "The pizza was a vibrant swirl of neon colors," rather than the more expected responses.
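As a minimal sketch of the temperature idea, the snippet below applies a temperature-scaled softmax to a handful of candidate completions. The candidate phrases and their scores are invented for illustration and do not come from any real model; the point is only that dividing the scores by a larger temperature spreads probability more evenly, so an unlikely completion gets considered more often.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats: larger means probability is spread more evenly."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical scores for completions of "The pizza was ..."
candidates = ["delicious", "hot", "cold", "a vibrant swirl of neon colors"]
logits = [4.0, 3.0, 2.0, -1.0]

if __name__ == "__main__":
    for t in (0.5, 1.0, 2.0, 5.0):
        probs = softmax_with_temperature(logits, t)
        print(f"temperature={t}: entropy={entropy(probs):.3f}")
        for phrase, p in zip(candidates, probs):
            print(f"  {p:.4f}  {phrase}")
```

Sampling from the flattened distribution is what makes the unexpected completion reachable. The trade-off is that the same flattening also raises the odds of outputs that are merely wrong, which is why selection matters as much as generation.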

As the AI iterates and learns, it can update its embedding space, a learned vector representation in which related concepts sit closer together and dissimilar ones further apart. As the embedding space remodels, novel relationships emerge between previously distant concepts, mimicking the counterfactual reasoning and imaginative associations that spur innovation.
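A toy illustration of what "closer together" means in an embedding space: each concept is a vector, and cosine similarity measures how aligned two vectors are. The three-dimensional vectors and the `nudge_toward` update below are hypothetical stand-ins chosen for readability. Real models learn embeddings with hundreds or thousands of dimensions by optimizing a training objective, not by a hand-written nudge.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nudge_toward(vec, target, rate=0.5):
    """Hypothetical update: move vec a fraction of the way toward target."""
    return [v + rate * (t - v) for v, t in zip(vec, target)]


# Invented toy embeddings; the numbers carry no real-world meaning.
embeddings = {
    "virus": [0.9, 0.1, 0.0],
    "pathogen": [0.8, 0.2, 0.1],
    "medicine": [0.0, 0.2, 0.9],
}

related = cosine_similarity(embeddings["virus"], embeddings["pathogen"])
before = cosine_similarity(embeddings["virus"], embeddings["medicine"])

# Simulate the space "remodeling" so that two distant concepts draw closer.
updated_virus = nudge_toward(embeddings["virus"], embeddings["medicine"])
after = cosine_similarity(updated_virus, embeddings["medicine"])

if __name__ == "__main__":
    print(f"virus vs pathogen:          {related:.3f}")
    print(f"virus vs medicine (before): {before:.3f}")
    print(f"virus vs medicine (after):  {after:.3f}")
```

Once the two vectors draw closer, a nearest-neighbor lookup around one concept starts returning the other, which is one simple way a previously distant association can surface.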

Those more familiar with AI may have read the above with alarm, identifying methods that could force an algorithm toward incorrect, nonsensical, and entirely fabricated outputs that ape plausibility. We call such outputs “hallucinations,” derided as a major fault that underscores the unreliability of AI.

So-called hallucinations represent the inklings of valuable unconventional outputs that illustrate the potential for counterfactual reasoning: "What if the world were different?" When these outputs align along Pearl’s ladder, they could offer valuable unexpected combinations of concepts, rather than simply nonsense, that a human might not naturally consider, and they can do this at scale, generating thousands of potentially novel ideas. The challenge then becomes: How do we identify the diamonds in the rough?

In biotech, we already use AI at the association level to, for example, identify patterns in genetic data, such as correlations between specific gene expressions and disease resistance. We can also model the impact of altering genes at the intervention level, simulating edits or silencing sequences to predict enhanced resistance. But what could be mistaken as mere hallucinations at the counterfactual level could spark breakthroughs. For example, hallucinated ideas about synthetic genes or novel pathways could inspire innovations that transcend conventional biology, offering new directions for therapies or vaccines.

Just as the microbiologist Alexander Fleming recognized the potential in his contaminated bacterial cultures, following a line of reasoning that led to penicillin, our interpretation of hallucinated data is just as important as its generation. By embracing these peculiarities, we can discover unique solutions or pose scientific hypotheses that push the boundaries of traditional thinking. This creativity copilot could be particularly valuable where fresh perspectives and unorthodox approaches are key to breakthroughs, offering a form of artificial serendipity that enhances human capabilities.

Causal intelligence appears to be a defining trait that sets humans apart from other species as we currently understand them. It underpins our ability to imagine, innovate, and transform the world around us by expanding the frontiers of knowledge through the act of science, an act that has historically been human. We collect knowledge, recombine, extend, and verify it, steps at which AI is becoming proficient. We see the potential to integrate AI into our emergent discovery process to help generate the types of far-flung hypotheses that lead to breakthroughs in human health and sustainability.

Flagship is developing AI to aid both reason and imagination, enhancing our ability to embrace and mine complexity and move away from reductionist science that has limited biology for years. Artificial intelligence and augmented imagination are the two AIs we foresee enabling bigger leaps into the future.

Discover how Flagship is advancing the boundaries of AI through our Pioneering Intelligence Initiative.

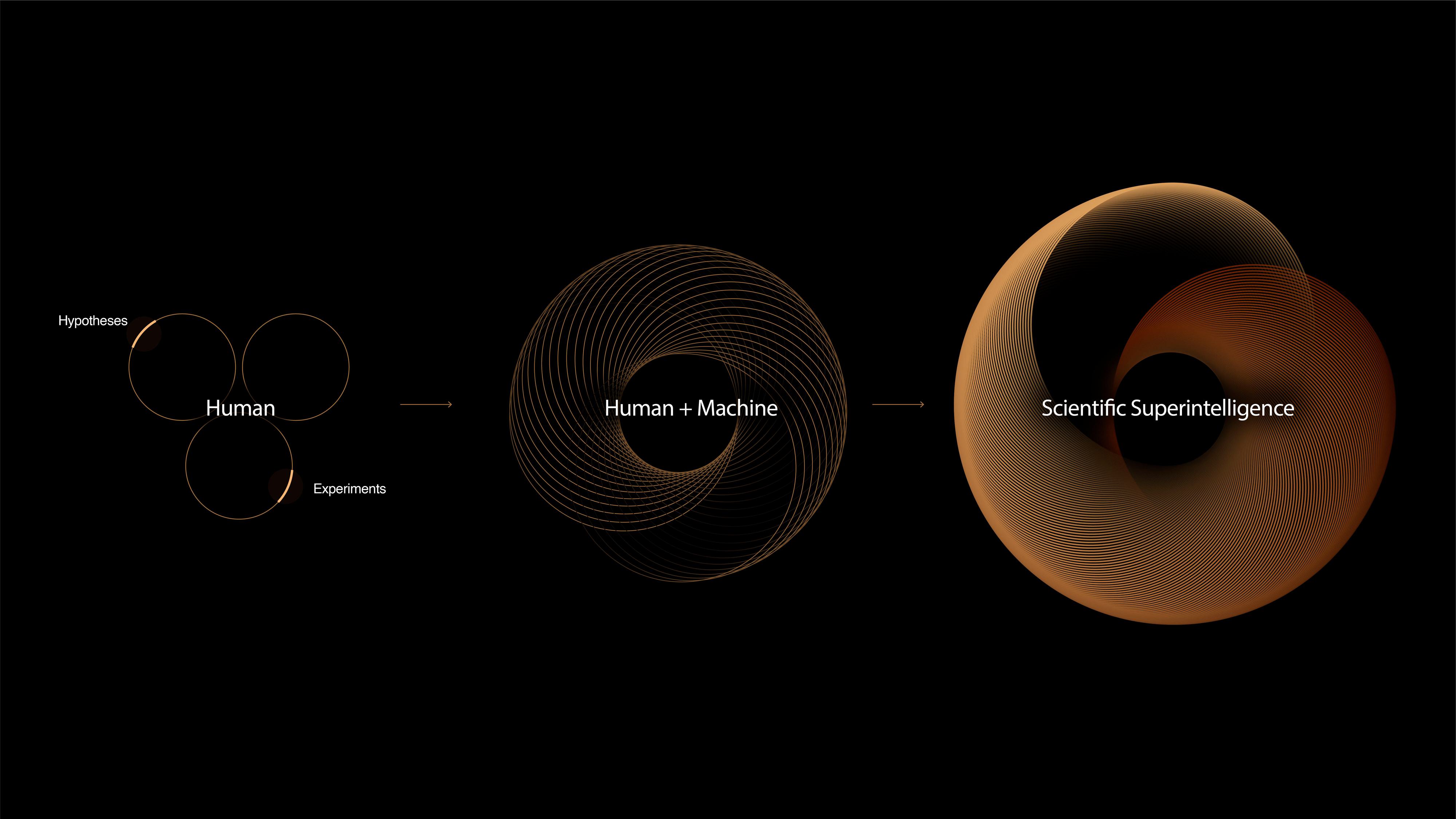

Achieving Scientific Superintelligence capable of driving the scientific method could pioneer knowledge that resides vastly beyond existing human understanding. By building AI to conduct all aspects of experimentation and discovery, we can unlock the ability to massively accelerate solutions to humankind’s greatest challenges.

Humans have been the primary architects of scientific discovery — observing the world, forming hypotheses, designing experiments, and extending our collective knowledge of the universe. The future of science, however, is beginning to shift, as machines trained on millions of scientific papers, genetic sequences, and chemical structures begin to integrate into the scientific method.

When we began applying generative AI to life science in 2018 at Flagship, we saw the potential for machines to learn the language of proteins from hundreds of millions of protein sequences and structures in Nature. Today, such AI systems can generate novel therapeutic proteins with precise biological functions, supporting our founding hypothesis and creating a new form of polyintelligence — the seamless fusion of natural, human, and machine intelligence.

This led us to imagine an intelligence that doesn’t just learn from existing knowledge but creates its own — venturing into territories neither human minds nor natural evolution have explored. To unlock this new form of scientific intelligence, we needed to reinvent the most valuable wheel that has ever existed: the wheel of the scientific method — creating AI systems where AI can hypothesize, experiment, play, and learn. AI agents wouldn’t just analyze experiments but actively design and execute them at scale.

We believe this scaled learning will enable the emergence of a new form of machine intelligence: Scientific Superintelligence — a higher level of scientific intelligence than either existing human or natural intelligence. In this paradigm, AI becomes the primary driver of the scientific method — autonomously refining its understanding of the world. This shift makes it possible to create new knowledge at exponential scale, uncovering solutions that lie beyond the limits of human cognition or existing datasets.

Achieving Scientific Superintelligence is central to realizing the full potential of polyintelligence. This synergy will allow us to illuminate solutions Nature has already devised through evolution while pushing the boundaries of discovery through AI’s capacity to explore, test, and learn beyond the frontiers of current science. Together, this fusion of intelligences unlocks a new era of discovery, accelerating innovation at a pace and scale never before imagined.

Scientific Superintelligence will impact everything — from pioneering novel medicines to reimagining carbon capture, advancing sustainable agriculture to addressing national security challenges. Centuries of scientific progress will compress into decades, revolutionizing what is possible in the discovery of knowledge.

For more on this reimagining of what science can become, visit Flagship-founded Lila.

Small molecules make great medicines, offering significant benefits to patients and society. The challenge is that the universe of possible drug-like compounds is so large it may as well be infinite to drug hunters. This historically made the discovery of good starting points — safe, disease-relevant chemistry — an unpredictable, lengthy, and difficult process.

Biosynthesis, in which organisms build complex molecules from simpler ones, is a fundamental feature of life. In addition to larger molecules like proteins, cells produce small molecules via enzymatic proteins that build or modify bioactive chemical compounds. As a result, programs for important chemistry can be discovered in genetic code.

Microbes are the original masters of chemical biosynthesis and those found within the human body have generated (and tested) a universe of small molecules that interact with every protein and conceivable drug target — the result of over 300,000 years of co-evolutionary experimentation across billions of human lives.

The most logical place to look for better medicines to safely treat human disease, it turns out, is within the human body. Eons of nature’s experiments have encoded programs for thousands of conserved, drug-like compounds against human disease targets into microbial genomes (i.e., the metagenome). Until now, we have simply lacked the tools and data to systematically access this evolutionary database.

Now, Empress is bringing the power of genetics to small molecules. Using artificial intelligence to interrogate and compare datasets of metagenomes from healthy and diseased individuals, Empress can pinpoint unique biosynthetic genes and pathways responsible for creating specific chemical compounds important to human health as well as their related targets and disease relevance. By leveraging genetic chemistry, Empress is working to increase not just the speed, but certainty, of small molecule drug discovery.

Global food production relies on over three billion tons of chemical pesticides annually. Pesticide residues contaminate our waterways, soils, and food, posing risks to workers, consumers, and wildlife, including birds, marine life, and beneficial insects. The challenge is to sustain or even boost food production while drastically cutting down on chemical pesticide usage.

Nature has devised sophisticated defenses. Fungi, plants, bacteria, and insects have evolved natural ways to protect themselves from threats. Grapevines, for example, produce natural defensins — peptides that can fight off certain fungal infections. These natural defense systems are not only effective but also targeted and biodegradable.

Invaio is decoding the complex relationships between natural molecules, crop systems, and the environment. Using cutting-edge technologies enabled by artificial intelligence, Invaio identifies naturally occurring compounds and then designs bioactives based on their properties with improved qualities. These molecules are coupled with programmable delivery systems that precisely direct them where they are needed most, then naturally biodegrade, ensuring maximum effectiveness with minimal environmental impact.

Invaio’s proprietary approach can rapidly identify and develop novel biological crop protections that both boost crop resilience and improve overall environmental health. These innovations will provide farmers with sustainable, nature-based alternatives to chemical pesticides, enhancing the long-term health of millions of acres of farmland.

Early detection of cancers can dramatically decrease the cost of treatment and increase survival rates, but current models for routine screening are often expensive, inaccessible, and predominantly reliant on outdated guidelines. Meanwhile, the majority of cancer diagnoses occur at later stages, when treatment is more complicated and expensive and mortality rates are higher. Moreover, many available screening tools for monitoring and early detection do not provide the accuracy and reliability needed for broad clinical adoption and application.

Our bodies communicate in a complex language of signs and signals about our health. If we can become fluent in this language, we can identify indicators of disease states (i.e., biomarkers), understand their implications, and take action. This biological information manifests even during the earliest cellular changes of cancer. These biomarkers signify the inception of cancer, before it takes root and before symptoms have materialized.

By synergizing profound insights into the biology of cancer's origin with the latest artificial intelligence and genetic screening technologies, Harbinger Health is extending detection timelines back to the inception of cancer, before symptoms emerge. Instead of focusing on the biomarkers of late-stage disease, Harbinger is working to identify and decode biological programs present at cancer's earliest cellular changes, shared across various cancer types. This ongoing innovation dramatically reframes the approach to cancer detection, predicting disease development accurately before it takes hold.

Building on this novel approach, Harbinger is navigating through vast amounts of genetic data to develop simple blood tests that accurately pinpoint the presence and location of cancer. These tests would expand access to reliable cancer screening technology and deliver clinically useful information to help transform a cancer diagnosis from something to fear into something to manage.

Genetics, a cornerstone of biology and medicine, is instrumental in the drug discovery process. However, with just one million human genomes sequenced, we’ve only explored a fraction of potential human genetic diversity. Novel links between genes and disease are constantly emerging inside every tissue — yet they have remained largely invisible to traditional genetics approaches. If we could map every possible genetic change to its impact on disease, we could create a complete genetic picture of each individual, greatly accelerating drug discovery.

The prevailing assumption that we each have one genome is off by a trillion-fold. Due to somatic mutations, each of the 30 trillion cells in our body carries a different sequence of DNA, akin to a massive genetic library comprising millions of copies of every possible change to our genome. As a consequence, each cell experiences disease differently. Some cells carry mutations that protect from disease and others carry mutations that cause or increase vulnerability to disease. It is as if ongoing clinical trials for these mutations are being conducted at the cellular level. Linking the functional outcomes to genetic information reveals new opportunities to cure, prevent, or reverse disease.

Recognizing this vast untapped potential, Quotient has developed the world's first and only Somatic Genomics platform, designed to map cells' DNA to function at incredible sensitivity, revealing a new landscape of genetic diversity that goes beyond our current understanding of genetics. This new frontier enables researchers to identify novel disease-modifying drug targets directly in humans with increased efficiency, precision, and confidence compared to alternative genetics approaches.

For example, Quotient has discovered drug targets that treat a disease called metabolic dysfunction-associated steatohepatitis (MASH), which is characterized by high lipid accumulation in the cells of the liver. In regions of a diseased liver that exhibited low fat build-up, Quotient discovered somatic mutations that yield resistance to disease. By associating these genetic changes with the altered disease biology, Quotient has identified potential targets for drug development.

Formulating effective drugs requires not just identifying and modulating elusive biological targets, but also precisely triggering specific actions and outcomes. This level of control is often difficult to achieve because of the intricacy and unpredictability of biological interactions. Targets may be difficult to access and their behaviors hard to anticipate. These obstacles often lead to drug development processes that are resource-intensive, uncertain, and lengthy, often culminating in experimental medicines that either fail to activate the desired function or produce unintended consequences.

Mimicry is a powerful strategy that organisms have evolved to achieve significant survival advantages. By copying specific features of other organisms, species can deter predators, attract mates, or adapt to environmental challenges. Consider the owl butterfly, whose wings carry markings that mimic the eyes of an owl. By replicating this particular feature, the butterfly can evoke the presence of a larger predator, deterring threats and enhancing its survival. Mimicking just this critical component achieves the desired function.

The power of mimicry can be applied at the molecular level to develop therapeutics that mimic molecular functions in the human body. Metaphore is replicating key molecular features to create medicines that drive specific biological actions. By mapping the molecular landscape at high resolution, Metaphore generates numerous sequence variants that imitate the essential functional characteristics of targets. This approach integrates multiple binding capabilities into a single antibody, allowing the molecule to bind to multiple targets while maintaining a monospecific structure. By leveraging one of nature’s oldest tricks, Metaphore is simplifying complex biological problems and enhancing the predictability of drug behavior to reduce the uncertainty traditionally associated with drug discovery.

Chronic diseases, such as inflammatory and autoimmune disorders, present a unique challenge due to their complex nature and need for long-term management. Existing approaches to develop small molecule treatments for these diseases are challenging and have high rates of attrition, producing few medicines that are efficacious over the long term without adverse side effects. With six in ten U.S. adults living with chronic illnesses, a new approach is urgently needed to introduce effective, safe, and widely accessible drugs.

Foods, herbs, and traditional medicines are rich with bioactive compounds that can exhibit a range of therapeutic effects. For instance, salicylic acid, the precursor of aspirin, was originally derived from willow bark, a traditional remedy for pain and inflammation. We have been consuming these natural sources for millennia and numerous lifesaving therapeutics have been serendipitously identified from them, yet we have not developed a universal method to search this chemical space for potential medicines.

These molecules harbor a wealth of untapped starting points for effective and safe drugs that can engage hard-to-drug, complex biology with precision and selectivity. This range of molecules is far more structurally diverse than typical synthetic chemical screening libraries, allowing for a broader range of possibilities to interact with complex biological targets.

Fueled by the exponential growth in biochemical data and powerful computational tools, Montai is decoding the intricate language of this richly diverse chemistry. Using AI to decode the structure-function relationships of any molecule designed by nature, Montai can efficiently predict its potential to specifically and effectively target biological pathways that drive chronic diseases. The company leverages its advanced computational tools to characterize and systematically mine its proprietary, curated database of one billion (and growing) largely untapped bioactive molecules to select the optimal starting point for new medicines. This methodical yet efficient approach to early drug discovery greatly expands the range of highly promising, drug-like small molecules for proven pathways — unlocking broader access to effective therapies for chronic diseases.