Even though computers have been getting faster and faster over the past 75 years, there’s still room for an exponential increase in speed. This boost should make it possible for machines to perform tasks that are impossible today, with particularly powerful applications in medicine and biology. To achieve it, the trick will be to make computers weirder.

Unlike conventional digital computers that process data as discrete 0s and 1s, quantum computers have bits whose values are fluid: essentially, they can be 0 and 1 at the same time. This is because the bits are made out of things that are so small (including individual atoms or electrons) that their behavior is defined by the oddities of quantum mechanics. One of these strange properties is superposition, where various possible states of a system exist at the same time. Another bizarre property is entanglement, in which two particles become so closely linked that the state of one cannot be described independently of the other. Because quantum bits, known as qubits, have both superposition and entanglement, a quantum computer can calculate with many complex variables at the same time. Exponential power takes hold quickly. Each qubit can represent two states at once, so two qubits can represent four states (2²), three qubits can represent eight (2³), and so on. Just 64 qubits have the potential to represent 2⁶⁴ states at once: more than 18 billion billion.
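
To see how quickly that doubling adds up, the arithmetic can be checked in a few lines of Python. This is only a sketch of the counting argument, not a simulation of a quantum computer, and the function name `state_count` is made up for illustration.

```python
def state_count(qubits: int) -> int:
    """Number of states that `qubits` qubits can represent at once (2 to the power n)."""
    return 2 ** qubits


# Each added qubit doubles the count.
for n in (1, 2, 3, 64):
    print(f"{n} qubits -> {state_count(n):,} states")
```

For 64 qubits the count is 18,446,744,073,709,551,616, which is where the "18 billion billion" figure comes from.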

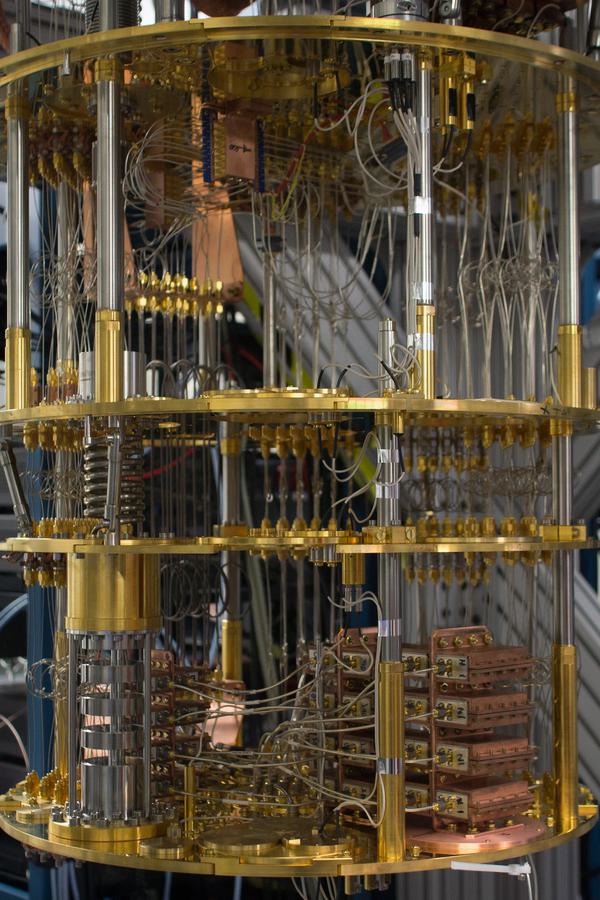

Quantum computers were first proposed about 40 years ago, but they are only now becoming real machines. Building and controlling them has proved difficult because their quantum weirdness arises from conditions that are hard to maintain. The most common way to produce these effects is to keep qubits extremely cold, a tiny fraction of a degree above absolute zero, and to shield them from electromagnetic interference. Because qubits are so fragile (they easily “decohere” into a state that doesn’t have quantum properties), their calculations are prone to mistakes. The results must be run through error-correcting algorithms, some of which require quantum calculations of their own. That uses up a sizable chunk of the machine’s processing power.